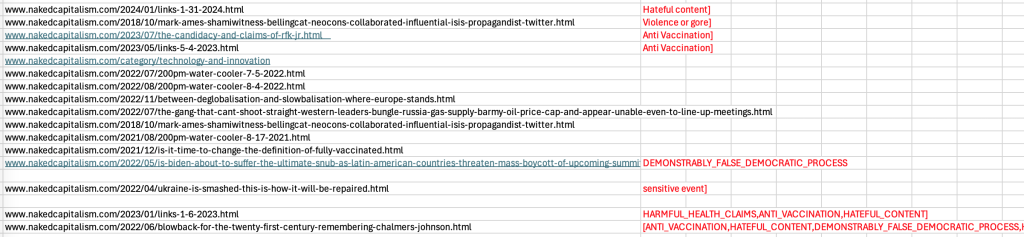

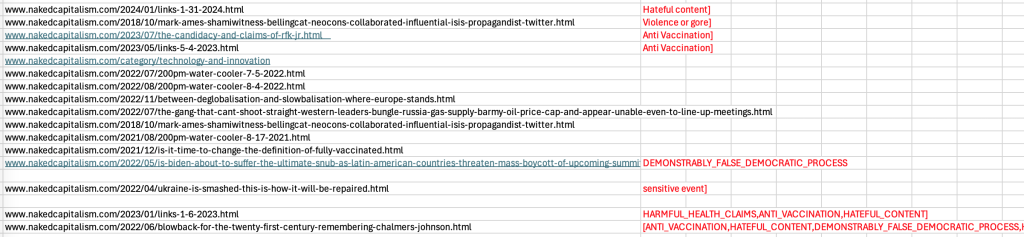

We posted briefly on a message from our ad service in which Google threatened to demonetize the site. The e-mail listed what it depicted as 16 posts from 2018 to present that it claimed violated Google policy. The full e-mail and the spreadsheet listing the posts Google objected to are at the end of this post as footnote 1.

We consulted several experts. All are confident that Google relied on algorithms to single out these posts. As we will explain, they also stressed that whatever Google is doing here, it is not for advertisers.

Given the gravity of Google’s threat, it is shocking that the AI results are plainly and systematically flawed. The algos did not even accurately identify unique posts that had advertising on them, which is presumably the first screen in this process. Google actually fingered only 14 posts in its spreadsheet, not the 16 it listed, for a false positive rate of 12.5% merely on identifying posts accurately.

Those 14 posts are out of 33,000 over the history of the site and approximately 20,000 over the time frame Google apparently used, 2018 to now. So we are faced with an ad embargo over posts that at best are less than 0.1% of our total content.

And of those 14, Google stated objections for only 8. Of those 8, nearly all, as we will explain, look nonsensical on their face.
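For readers who want to check our arithmetic, here is a minimal sketch in Python that reproduces the figures above. The numbers come straight from this post; the variable names are our own, and the 20,000 figure is our approximation of posts since 2018, not a count supplied by Google.

```python
# Figures from the post; variable names are illustrative only.
listed_rows = 16        # rows in Google's spreadsheet
duplicate_rows = 1      # rows 4 and 12 share a URL, so one row is redundant
non_post_rows = 1       # row 7 is a category page, not a post
actual_posts = listed_rows - duplicate_rows - non_post_rows

# Share of spreadsheet rows that did not identify a unique post
bad_row_rate = (listed_rows - actual_posts) / listed_rows

# Flagged posts as a share of site content
share_since_2018 = actual_posts / 20_000   # approximate post count, 2018 to now
share_all_time = actual_posts / 33_000     # posts over the history of the site

# Posts for which Google stated an objection, and those left unexplained
posts_with_objection = 8
posts_unexplained = actual_posts - posts_with_objection

print(f"Unique posts flagged: {actual_posts}")
print(f"Rows that were not unique posts: {bad_row_rate:.1%}")
print(f"Share of posts since 2018: {share_since_2018:.2%}")
print(f"Share of all posts: {share_all_time:.2%}")
print(f"Posts with no stated objection: {posts_unexplained}")
```

Even on the narrower 2018-to-now base, the flagged share comes out under one-tenth of one percent.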

For those new to Naked Capitalism, the publication is regularly included in lists of best economics and finance websites. Our content, including comments, is archived every month by the Library of Congress. Our major original reporting has been picked up by major publications, including the Wall Street Journal, the Financial Times, Bloomberg, and the New York Times. We have articles included by academic databases such as the ProQuest news database. Our comments section has been taught as a case study of reader engagement in the Sulzberger Program at the Columbia School of Journalism, a year-long course for mid-career media professionals.

So it seems peculiar with this site having a reputation for high-caliber analysis and original reporting, and a “best of the Web” level comments section, for Google to single us out for potentially its most extreme punishment after not voicing any objection since we started running ads, more than 16 years ago.

We’ll discuss:

Why these censorship demands are not advertiser-driven

The non-existent posts in the spreadsheet

The wildly inaccurate negative classifications

Censorship Demands Do Not Come From Advertisers

We have been running Google ads from the early days of this site, which launched in 2006. We have never gotten any complaints from readers or from our ad agency on behalf of advertisers about the posts Google took issue with, let alone other posts or our posts generally.2 That Google flagged these 14 posts is odd given how dated most are:

1 from 2018

2 from 2021

7 from 2022

3 from 2023

1 from 2024

It is a virtual certainty that no person reviewed this selection of posts before Google sent its complaint. It is also a virtual certainty that no Internet readers were looking at material this stale either. This is even more true given that 6 of the 14 were news summaries, as in our daily Links or our weekday Water Cooler, which are particularly ephemeral and mainly reflect outside content.

A recovering ad sales expert looked at our case and remarked:

No human is flagging these posts. This is all done entirely by AI. Google’s Gemini is notoriously bad and biased. Not sure if Gemini has anything to do with it. But if it does, it would explain a lot.

Advertisers want to communicate with people to sell their products to. It’s hard to imagine that they don’t want to sell products to your readers. And I’m pretty sure advertisers have no idea that the Google bot is blocking their carefully crafted communications from reaching your readers — which is what “demonetize” means.

Then too, advertisers are using the automated Google system to place ads, and they get to pick and choose some characteristics that publishers offer, and they can specify certain things. Google’s AI takes it from there.

But the fundamental thing is that advertisers want to connect with humans and sell them stuff. Blocking their communications to humans is not helpful in making sales.

I’m very worried about Google. It has immense power over the publishing industry. It controls nearly all the infrastructure to place ads on websites. It is now getting sued by publishers from News Corp on down, and by the FTC. They have lots of very good reasons – and you put your finger on one of them.

We have managed to reach only one other publisher, whose beats overlap considerably with ours, who also got a nastygram from his ad service on behalf of Google with a list of offending posts. But Google did not threaten him with demonetization as it did with us.

This publisher identified posts where the claims were erroneous and Google relented on those. This indicates that Google has been sloppy and overreaching in this area for some time and has not bothered to correct its algorithm.

Our contact was finally able to get Google to articulate its remaining objections with granularity. They were all about comments, not the posts proper.

It is problematic for Google to be censoring reader comments, since the example above underscores the notion that Google’s process is not advertiser-driven.

As articles about advertiser concerns attest, their big worry is an ad appearing in visual proximity to content at odds with the brand image or intended messaging.3

We do have one ad slot, which often does not “fill”, that is towards the end of our posts and thus near the start of the comments section. Since our comments section is very active, only the top one to at most three (depending on their length) would be near an ad.

So for the considerable majority of our relatively sparse ads, the viewer will see any ads very early in a post, and will get their impression, and perhaps even click through, then. It will be minutes of reading later before they get to comments, which often are many and always varied, with readers regularly and vigorously contesting factually-challenged views.

Unlike most sites of our size, we make a large budget commitment to moderation. The result is that our comments section is widely recognized as one of the best on the Internet, as reflected by its being the focus of a session in a Columbia School of Journalism course. We do not allow invective or unsupported outlier views (“Making Shit Up”), and we regularly reject comments for lack of backup, particularly links to sources. In particular, we do not allow comments that are clearly wrong-headed, like those that oppose vaccination.

So given the substantial time and screen real estate between our ads and the majority of our comments, and our considerable investment in having the comments section be informative and accurate, it is hard to see Google legitimately depicting its censorship in our case as being out of its tender concern for advertisers.

Non-Existent Posts in the Spreadsheet

Why do we say Google’s spreadsheet lists only 14 potentially naughty posts rather than 16?

Rows 4 and 12 are duplicate entries, so one must be thrown out. Row 7 is not a post:

Rows 4 and 12 both list the same URL: www.nakedcapitalism.com/2018/10/mark-ames-shamiwitness-bellingcat-neocons-collaborated-influential-isis-propagandist-twitter.html

Row 7 lists a URL for a category page: www.nakedcapitalism.com/category/technology-and-innovation

The URLs in rows 4 and 12 are identical, yet the AI somehow did not catch that. The URL in row 7 is for a category page, automatically generated by WordPress, that simply lists post titles under that category. How can a table of contents violate any advertising agreement when it contains no ads? On top of that, none of the articles on this category page are among the 14 Google listed.

But the category is “Technology and Innovation” and contains articles about Google, particularly anti-trust actions and lawsuits. So could the AI be sanctioning us for featuring negative news stories about Google?
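To underscore how basic the two screening errors described above are, here is a minimal sketch of the checks that would have caught them. It uses only the three rows we discuss in this section (the other rows of the spreadsheet are omitted), and the assumption that category pages can be recognized by a `/category/` path prefix is ours, based on the URL in row 7. We have no knowledge of how Google's system actually works.

```python
from collections import Counter
from urllib.parse import urlparse

# Only the three spreadsheet rows discussed in this section, keyed by row number.
flagged = {
    4: "www.nakedcapitalism.com/2018/10/mark-ames-shamiwitness-bellingcat-neocons-collaborated-influential-isis-propagandist-twitter.html",
    7: "www.nakedcapitalism.com/category/technology-and-innovation",
    12: "www.nakedcapitalism.com/2018/10/mark-ames-shamiwitness-bellingcat-neocons-collaborated-influential-isis-propagandist-twitter.html",
}


def is_category_page(url: str) -> bool:
    """Assumed heuristic: category pages live under /category/."""
    # The spreadsheet URLs carry no scheme, so add one before parsing.
    path = urlparse("https://" + url).path
    return path.startswith("/category/")


# Rows whose URL appears more than once in the list
url_counts = Counter(flagged.values())
duplicate_rows = sorted(row for row, url in flagged.items() if url_counts[url] > 1)

# Rows that point to a category listing rather than a post
category_rows = sorted(row for row, url in flagged.items() if is_category_page(url))

print("Rows sharing a URL:", duplicate_rows)
print("Rows that are category pages:", category_rows)
```

A few lines of standard-library code are enough to spot both problems, which is why their presence in a list backing a demonetization threat is so striking.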

The Wildly Inaccurate Negative Classifications

Google did not cite any misconduct on some posts in its spreadsheet. On the 8 where Google did, virtually all are off the mark, some wildly so.

Let us start with the one that got the most red tags. It was an article by Tom Engelhardt, a highly respected writer, editor, and publisher. His piece was a retrospective on the foreign policy expert Chalmers Johnson’s bestseller, Blowback (which Engelhardt had edited), titled Blowback for the Twenty-First Century, Remembering Chalmers Johnson.

Google’s spreadsheet dinged Engelhardt’s article as follows: [ANTI_VACCINATION, HATEFUL_CONTENT, DEMONSTRABLY_FALSE_DEMOCRATIC_PROCESS, HARMFUL_HEALTH_CLAIM].

Engelhardt was completely mystified and said he had gotten no complaints about this 2022 post. The article has nothing about vaccines or health. The only mentions in comments are in passing and harmless, such as:

….When I tell my Democrat friends that Biden’s COVID policies have been arguably worse than Trump’s, they are shocked that I would say such a thing.

The article includes many quotes from Chalmers’ book, after which Engelhardt segues to a view that ought to appeal to Google’s Trump-loathing executives and employees: that Trump is a form of blowback. So it is hard to see the extended but not-even-strident criticism of Trump as amounting to [HATEFUL_CONTENT].4

Perhaps the algo choked on this section?

He certainly marked another key moment in what Chalmers would have thought of as the domestic version of imperial decline. In fact, looking back or, given his insistence that the 2020 election was “fake” or “rigged,” looking toward a country in ever-greater crisis, it seems to me that we could redub him Blowback Donald.

The “He” at the start of the paragraph is obviously Trump, but Engelhardt violated a writing convention by opening a paragraph with a pronoun rather than a proper name. The second sentence is long and awkward and doesn’t identify Trump until the end. Did the AI read the reference to “the 2020 election was ‘fake’ or ‘rigged’” as Engelhardt’s claim, as opposed to Trump’s?

The first comment linked to and excerpted: WHO Forced into Humiliating Backdown. Biden had proposed 13 “controversial” amendments to International Health Regulations. For the most part, advanced economy countries backed them. But, as Reuters confirmed, 47 members of the WHO Regional Committee for Africa opposed them, along with Iran and Malaysia (the link in comments adds Brazil, Russia, India, China, South Africa). So Google is opposed to readers reporting on the US making proposals to the WHO that go down to defeat?

Another example is a post that Rajiv Sethi, Professor of Economics at Barnard College, Columbia University, allowed us to cross post from his Substack: The Candidacy and Claims of RFK, Jr. Sethi is an extremely careful and rigorous writer. This is the thesis of his article:

Kennedy believes with a high degree of subjective certainty many things that are likely to be false, or at best remain unsupported by the very evidence he cites. I discuss one such case below, pertaining to all-cause mortality associated with the Pfizer vaccine. But the evidence is ambiguous enough to create doubts, and the failure of many mainstream outlets and experts to acknowledge these doubts fuels suspicion in the public at large, making people receptive to exaggerated claims in the opposite direction.

Sethi then proceeded to provide extensive data and analysis of his “one such case”:

The claim in question is not that the vaccine is ineffective in preventing death from COVID-19, but that these reduced risks are outweighed by an increased risk of death from other factors. I believe that the claim is false (for reasons discussed below), but it is not outrageous.

Google labeled the post [AntiVaccination]. So according to Google, it is anti-vaccination (by implication for vaccines in general) to rigorously examine one not-totally-insane anti-Covid vaccine position, deem that the available evidence says the view is probably incorrect, and say more information is needed.

When we informed Sethi of the designation, he replied, “Wow that’s just incredible,” and said he would write a post in protest.5

Another nonsensical designation is Washington Faces Ultimate Snub, As Latin American Heads of State Threaten to Boycott Summit of Americas.

Google designated it [Demonstrably_False_Democratic_Process]. Huh?

The article reports on a revolt by Latin American leaders over a planned US “Summit of the Americas” where the US was proposing to exclude countries that it did not consider to be democratic, namely Cuba, Nicaragua, and Venezuela. The leaders of Mexico, Honduras and Bolivia said they would not participate if the US did not include the three nations it did not deem to be democratic, and Brazil’s president also planned to skip the confab.

The post is entirely accurate and links to statements by State Department officials and other solid sources, including quoting former Bolivia President Evo Morales criticizing US interventionism and sponsorship of coups. So it is somehow anti-democratic to report that countries the US deems to be democracies are not on board with excluding other countries not operating up to US standards from summits?

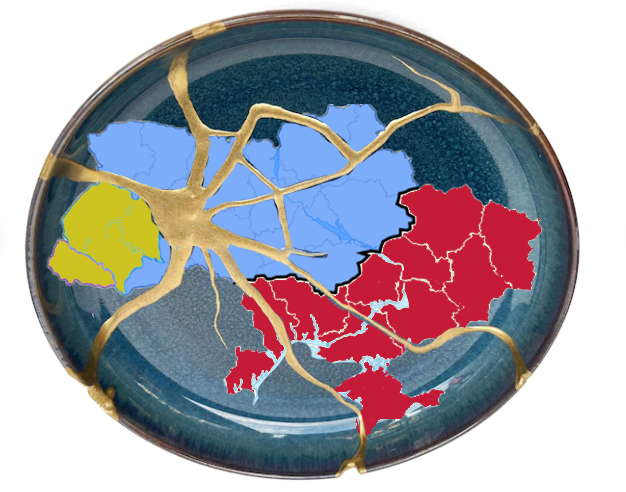

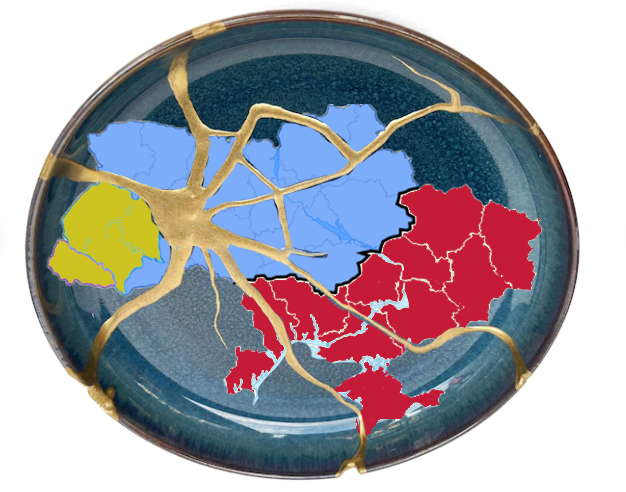

Another Google label that seems bizarre is having [sensitive event] as a mark of shame, here applied to a post by John Helmer, Ukraine Is Smashed – This Is How It Will Be Repaired.

If discussion of sensitive events were verboten, reporting and commentary would be restricted to the likes of feel-good stories, cat videos, recipes, and decorating tips. The public would be kept ignorant of everything important that was happening, and particularly events like mass shootings, natural disasters, coups, drug overdoses, and potential developments that were simply upsetting, like market swan dives or partisan rows.

Helmer’s post focused on what the map of Ukraine might look like after the war. His post included maps from the widely-cited neocon Institute for the Study of War and the New York Times.

So what could possibly be the “sensitive event”? Many analysts, commentators, and officials have been discussing what might be left of Ukraine when Russia’s Special Military Operation ends. Is it that Helmer contemplated, in 2022, that Russia would wind up occupying more territory than it held then? Or was it the use of the word “smashed” as a reference to the Japanese art of kintsugi,6 which he described as:

Starting several hundred years earlier, the Japanese, having to live in an earthquake zone, had the idea of restoring broken ceramic dishes, cups, and pots. Instead of trying to make the repairs seamless and invisible, they invented kintsugi (lead image) – this is the art of filling the fracture lines with lacquer, and making of the old thing an altogether new one.

This peculiar designation raises another set of concerns about Google’s AI: How frequently is it updated? For instance, there has been a marked increase in press coverage of violence as a result of the Gaza conflict: bombings and snipings of fleeing Palestinians, children being operated on without anesthetic, doctors alleging they were tortured. If Google is not keeping its algos current, it could punish independent sites for being au courant with reporting when reporting takes a bloodier turn. That of course would also help secure the advantageous position of mainstream media.

Yet another peculiar Google choice: Links 1/6/2023 as [HARMFUL_HEALTH_CLAIMS,ANTI_VACCINATION,HATEFUL_CONTENT]

The section below is probably what triggered the red flags. The algo is apparently unable to recognize that criticism of the Covid vaccine mandate is not anti-vaccination. The extract below cites a study that shows that the vaccines were more effective, at least with the early variants, at preventing bad outcomes than having had a previous case of Covid.

The section presumed to have offended Google:

SARS-CoV-2 Infection, Hospitalization, and Death in Vaccinated and Infected Individuals by Age Groups in Indiana, 2021‒2022 American Journal of Public Health n = 267,847. From the Abstract: “All-cause mortality in the vaccinated, however, was 37% lower than that of the previously infected. The rates of all-cause ED visits and hospitalizations were 24% and 37% lower in the vaccinated than in the previously infected. The significantly lower rates of all-cause ED visits, hospitalizations, and mortality in the vaccinated highlight the real-world benefits of vaccination. The data raise questions about the wisdom of reliance on natural immunity when safe and effective vaccines are available.” Of course, the virus is evolving, under a (now global) policy of mass infection, so these figures may change. For the avoidance of doubt, I’m fully in agreement with KLG’s views on the ethics of vaccine mandates (“no”). And population level-benefit, as here, is not the same as bad clinical outcomes in certain cases (again, why mandates are bad). As I keep saying, we mandated the wrong thing (vaccines) and didn’t mandate the right thing (non-pharmaceutical interventions). So it goes.

Perhaps the algo does not know what non-pharmaceutical interventions are (e.g., masking, ventilation, social distancing, and hand washing) and that they are endorsed by the WHO and the CDC?

Or perhaps the algo does not recognize that criticizing the vaccine mandate policy is not the same as being opposed to vaccines?

Unlike the Measles, Mumps, Rubella (MMR) series with which most Americans are familiar, SARS-CoV-2 vaccines were non-sterilizing, meaning they did not prevent disease spread (“breakthrough infections”) at the population level.

widely propagated slogan “vax and relax,” and at the official level by President Biden’s statement that “if you’re vaccinated, you are protected.” That bogus notion was reinforced by policies like New York City’s Key to NYC program, where proof of vaccination was required for indoor dining, indoor fitness, and indoor entertainment, and by then-CDC Director Rochelle Walensky’s statement that “the scarlet letter of this pandemic is the mask.” Even Harvard, where Walensky had been a professor of medicine, objected strenuously to this remark, saying:

Masks are not a “scarlet letter” …They’re also just necessary to prevent transmission of the virus. There is no path out of this pandemic without masks.

As for HATEFUL_CONTENT, the only thing we could find was:

Victory to Come When Russian Empire ‘Ceases to Exist’: Ukraine Parliament Quotes Nazi Collaborator Haaretz. Bandera.

Haaretz called out the existence of Nazis in Ukraine. That again is not a secret. The now-ex Ukraine military chief General Zaluzhny was depicted in the Western press as having not one but two busts of the brutal Stepan Bandera in his office. Far too many photos of Ukraine soldiers also show Nazi insignia, such as the black sun and the wolfsangel.

The algo labeled Links 5/4/2023 as [AntiVaccination]. Apparently small websites are not allowed to quote studies from top tier medical journals and tease out their implications. This text is the most plausible candidate as to what set off the Google machinery:

Evaluation of Waning of SARS-CoV-2 Vaccine–Induced Immunity JAMA. The Abstract: “This systematic review and meta-analysis of secondary data from 40 studies found that the estimated vaccine effectiveness against both laboratory-confirmed Omicron infection and symptomatic disease was lower than 20% at 6 months from the administration of the primary vaccination cycle and less than 30% at 9 months from the administration of a booster dose. Compared with the Delta variant, a more prominent and quicker waning of protection was found. These findings suggest that the effectiveness of COVID-19 vaccines against Omicron rapidly wanes over time.” Remember “You are protected?” Good times. Liberal Democrats should pay a terrible price for its vax-only strategy. Sadly, there’s no evidence they will pay any price at all, unless President Wakefield’s candidacy catches fire.

Google dinged Links 1/31/2024 as [Hateful content]. We did not find anything problematic in the post. However, since early 2022, virtually every day, readers have been submitting sardonic and topical new lyrics to pop standards. The first comment, which would be near an ad if our final ad spot “filled,” was about the Israeli Defense Force sniping children. From the top:

Antifa

January 31, 2024 at 7:03 am

UP ON THE GAZA WALL

(You Make My Dreams Come True by Hall & Oates)

My best shot?

I got some Arab kid in sandals

Up here that ain’t a scandal

It’s just a sniper’s game, yeah, yeah

We all talk

A lot our thoughts may seem like chatter

We get bored up here together

We shoot kids to ease our pain

Google’s search engine shows not only many reports of IDF soldiers sniping children (and systematically targeting journalists), but also that the UN reported the same crime in 2019, per the Telegraph: Israeli snipers targeted children, health workers and journalists in Gaza protests, UN says. Advertisers did not boycott the Torygraph for reporting this story.

Our attorney, who is a media/First Amendment expert and has won 2 Supreme Court cases, did not see the ditty as unacceptable from an advertiser perspective. Anything that overlaps with opinion expressed in mainstream media or highly trafficked and reputable sites is by definition not problematic discourse. It is well within boundaries of mainstream commentary even if it does use dark humor to make its point. Imagine how many demerits Google would give Dr. Strangelove!

The last of the eight was the post Google listed twice, Mark Ames: ShamiWitness: When Bellingcat and Neocons Collaborated With The Most Influential ISIS Propagandist On Twitter, but only gave a red label once: [Violence or gore].

Here Google had an arguable point. The post included a photo of a jihadist holding up a severed head. Yes, the head was pixelated, but one could contend not sufficiently. The same image is on Twitter and a Reddit thread, so some sites do not see it as beyond the pale. But author Mark Ames said the image was not essential to the argument and suggested we remove it, which we have done.

That leaves 6 additional posts where Google has not clued us in to why they were flagged. Given the rampant inaccuracies in the designations it did make, it seems a waste of mental energy to try to fathom what the issue might have been.

We have asked our ad service to ask Google to designate the particular section(s) of text that they deemed to be problematic, since they have provided that information to other publishers. We have not gotten a reply.

In the meantime, I hope you will circulate this post widely. It is a case study in poorly implemented AI and how it does damage. If Google, which has more money than God, can’t get this right, who can? Technologists, business people being pressed to get on the AI bandwagon, Internet publishers and free speech advocates should all be alarmed when random mistakes can be rolled up into a bill of attainder with no appeal.

_____

1 We believe the text of the message from our ad service accurately represents what Google told them, since we raised extensive objections and our ad service went back to Google over them. We would have expected the ad service to have identified any errors in their transmittal and told us rather than escalating with Google.

The missive:

Hope you are doing well!

We noticed that Google has flagged your site for Policy violation and ad serving is restricted on most of the pages with the below strikes:

I’ve listed the page URLs in a report and attached it to the email. I request you to review the page content and fix the existing policy issues flagged. If Google identifies the flags consistently and if the content is not fixed, then the ads will be disabled completely to serve on the site. Also, please ensure that the new content is in compliance with the Google policies.

Let me know if you have any questions.

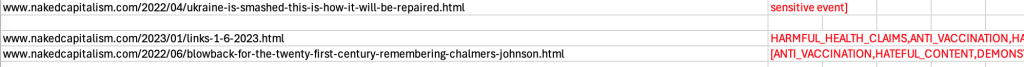

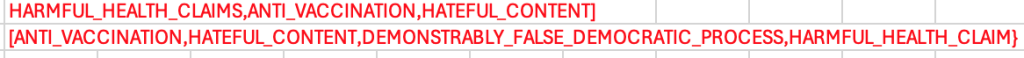

Here are screenshots of the spreadsheet:

For a full-size/full-resolution image, Command-click (MacOS) or right-click (Windows) on screenshots and “open image in new tab.”

Here are the full complaints about the last two entries, which are truncated in the screenshot above:

2 Except for:

Receiving a defamation complaint, well after the statute of limitations had expired, for factually accurate reporting as later confirmed by an independent investigation.

3 We have had numerous instances over the history of the site of Google showing a lack of concern about how its ads match up with our post content, to the degree that we have this mention in our site Policies:

Some of you may be offended that we run advertising from large financial firms and other institutions that you may regard as dubious and often come under attack on this blog. Please be advised that the management of this site does not choose or negotiate those placements. We use an ad service and it rounds up advertisers who want to reach our educated and highly desirable readership.

We suggest you try recontextualizing. How successful do you think these corporate conversion efforts are likely to be? And in the meantime, they are supporting a cause you presumably endorse. Consider those ads to be an accidental form of institutional penance.

4 There was a comment, pretty far down the thread, that linked to a Reddit post on police brutality, with a police representative apparently making heated remarks about how cops could not catch a break, juxtaposed with images of police brutality. I could not view it because Reddit wanted visitors to log in to prove they are 18 and I don’t give out my credentials for exercises like that. However, we link to articles and feature tweets on genocide. There are many graphic images and descriptions of it on Twitter. And recall the Reddit video was not embedded but linked and hence was not content on the site.

6 Helmer used this image in his post and we reproduced it. Could that have put Google’s AI on tilt?